Artificial neural networks (ANNs) have become one of the most talked-about technologies in recent years. But what exactly are they, and how do they work? This article explains artificial neural networks in simple terms, looking at what they are, how they operate, their history, and some real-world applications.

What is an Artificial Neural Network?

An artificial neural network is a type of machine learning algorithm modeled after the human brain’s biological neural networks. The key components of ANNs include:

- Input Layer – The input layer receives the data, such as image pixels or text, and passes it on to the network for processing.
- Hidden Layers – The hidden layers are the intermediate layers between the input and output. This is where most of the computational heavy lifting is done through a series of mathematical operations. Multiple hidden layers allow the network to learn more complex relationships.
- Output Layer – The output layer produces the final output of the network, for example a prediction of whether an image contains a cat or a sentiment score for the text of a movie review.
- Connections – Neurons in adjacent layers are connected to each other, and each connection has an associated weight. The weights are adjusted as the network is trained to improve accuracy.
- Activation Function – The activation function transforms the weighted sum of a node’s inputs into that node’s output. Common activation functions include sigmoid, tanh, and ReLU.
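
To make these components concrete, here is a minimal sketch in plain Python of a network with two inputs, one hidden layer, and one output. The weights, biases, and layer sizes are hand-picked, hypothetical values chosen only for illustration; a real network would learn them during training.

```python
import math

# Three common activation functions.
def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def tanh(z):
    return math.tanh(z)

def relu(z):
    return max(0.0, z)

def dense_layer(inputs, weights, biases, activation):
    """One fully connected layer: each neuron applies the activation
    function to its weighted sum of inputs plus a bias."""
    outputs = []
    for neuron_weights, bias in zip(weights, biases):
        z = sum(w * x for w, x in zip(neuron_weights, inputs)) + bias
        outputs.append(activation(z))
    return outputs

# Hypothetical, hand-picked parameters (not learned).
hidden_weights = [[0.5, -0.5], [1.0, 1.0]]  # 2 hidden neurons, 2 inputs each
hidden_biases = [0.0, -1.0]
output_weights = [[1.0, -2.0]]              # 1 output neuron, 2 hidden inputs
output_biases = [0.5]

def forward(inputs):
    # Input layer -> hidden layer (ReLU) -> output layer (sigmoid).
    hidden = dense_layer(inputs, hidden_weights, hidden_biases, relu)
    return dense_layer(hidden, output_weights, output_biases, sigmoid)

prediction = forward([2.0, 1.0])
print(prediction)  # a single value between 0 and 1, about 0.047 here
```

The sigmoid on the output neuron squashes the result into the range 0 to 1, which is one common way to read an output as a probability-like score.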

How Do Artificial Neural Networks Work?

Artificial neural networks work by mimicking how the human brain’s network of neurons functions. Let’s break it down step-by-step:

- Data is fed into the input layer of the network. This might be image pixels, text data, or some other type of input.
- Each connection into a neuron carries a weight. The weight determines how much influence that input has on the neuron’s activation.
- The weighted inputs are summed together along with a bias value.
- The weighted sum passes through the neuron’s activation function, which converts it into the neuron’s output. Common activation functions include sigmoid, tanh, and ReLU.
- The neuron’s output serves as input to the neurons in the next layer of the network. The process repeats, passing data from layer to layer.
- After a complete pass through the network, an output is generated at the output layer, for example a classification or a predicted value.
- During training, that output is compared with the expected result to produce an error signal. The error is propagated backward through the network (backpropagation), and the weights are adjusted by an optimization process like gradient descent. This is how the network learns.

By repeating this process over many iterations, the network can learn to make accurate predictions for new unseen data. The key is finding the right combination of network architecture, hyperparameter values, and training data.
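
As a rough illustration of that loop, the sketch below trains a single sigmoid neuron with gradient descent. The dataset (the logical OR function), the learning rate, and the number of epochs are assumptions chosen for the example, and the error signal used here is the gradient of the cross-entropy loss for a sigmoid neuron, which is one common choice rather than the only one.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

# Toy dataset (an assumption for illustration): the logical OR function.
samples = [
    ([0.0, 0.0], 0.0),
    ([0.0, 1.0], 1.0),
    ([1.0, 0.0], 1.0),
    ([1.0, 1.0], 1.0),
]

def predict(weights, bias, inputs):
    # Weighted sum plus bias, passed through the activation function.
    z = sum(w * x for w, x in zip(weights, inputs)) + bias
    return sigmoid(z)

def mean_loss(weights, bias):
    # Average cross-entropy loss over the dataset.
    total = 0.0
    for inputs, target in samples:
        p = predict(weights, bias, inputs)
        total -= target * math.log(p) + (1.0 - target) * math.log(1.0 - p)
    return total / len(samples)

def train(epochs=2000, learning_rate=0.5):
    weights, bias = [0.0, 0.0], 0.0
    for _ in range(epochs):
        for inputs, target in samples:
            # Error signal: prediction minus target.
            error = predict(weights, bias, inputs) - target
            # Gradient descent: move each weight against its gradient.
            weights = [w - learning_rate * error * x
                       for w, x in zip(weights, inputs)]
            bias -= learning_rate * error
    return weights, bias

initial_loss = mean_loss([0.0, 0.0], 0.0)
weights, bias = train()
final_loss = mean_loss(weights, bias)
predictions = [round(predict(weights, bias, inputs)) for inputs, _ in samples]
print(initial_loss, final_loss, predictions)
```

A multilayer network follows the same pattern, except that backpropagation is needed to carry the error signal from the output layer back to the weights of the earlier layers.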

A Brief History of Neural Networks

The history of artificial neural networks stretches back decades:

- 1943 – Warren McCulloch and Walter Pitts develop the first conceptual model for an artificial neural network. Their work showed how human brain neurons could be modeled by simple circuits.
- 1958 – Frank Rosenblatt builds the Perceptron, the first trainable neural network, inspired by the McCulloch-Pitts neuron model.
- 1960s – Neural network research stagnates due to limitations in compute power and datasets. In 1969, Minsky and Papert publish Perceptrons, a book critiquing the Perceptron, dampening interest.
- 1970s-1980s – Advances in backpropagation and multilayer perceptron networks renew interest in neural networks.
- 2000s – Limited data and compute power again slow neural network research, even as convolutional and recurrent neural networks, first introduced in the 1980s and 1990s, continue to be refined.
- 2010s – The availability of huge datasets and increased computational power from GPUs enable the development of deep learning using multilayer neural networks.

So while the core concepts have been around for decades, it’s really the recent advances in big data and compute power that have unlocked the full potential of neural networks and made them practical for complex real-world applications.

Real-World Applications of Neural Networks

Here are some examples of artificial neural networks used in the real world today:

- Computer Vision – ANNs are used for image classification, object detection, image segmentation, and more. Applications include facial recognition, self-driving cars, and medical imaging diagnostics.
- Natural Language Processing – ANNs can analyze unstructured text data for sentiment analysis, language translation, text summarization, and other NLP tasks.
- Recommendation Systems – Services like YouTube, Amazon, and Netflix use ANNs to recommend content to users based on their interests and history.
- Anomaly Detection – Neural networks can detect anomalous behavior in credit card transactions, network activity, and other systems, which makes them useful for fraud prevention.
- Time Series Forecasting – Recurrent neural networks are well suited to sequence data like stock prices or weather patterns, which makes them a common choice for time series forecasting.

In conclusion, artificial neural networks are computing systems modeled after the biological neural networks of animal brains. Their architecture consists of layers of interconnected neurons that transmit signals and learn. While conceived decades ago, ANNs have recently exploded in popularity due to big data and increased compute power. Today they enable many real-world applications in vision, language, recommendation systems, and more. While much progress has been made, neural networks and deep learning still have ample room to continue advancing in the years to come.

What is an Artificial Neural Network?

An artificial neural network (ANN) is a computing system patterned after the operation of neurons in the human brain.

Perceptron Artificial Neural Network

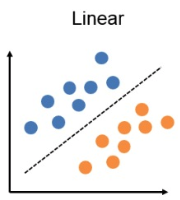

The perceptron is the simplest type of artificial neural network. It is typically used for making binary predictions, and it works only when the data is linearly separable, that is, when a single straight line (or hyperplane) can divide the two classes.
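
The linear-separability limit can be seen in a small sketch of the classic perceptron learning rule. The two toy datasets (logical AND and XOR), the epoch count, and the learning rate are assumptions chosen for illustration. AND is linearly separable, so the perceptron learns it; XOR is not, so no setting of the weights can get all four cases right.

```python
def step(z):
    # Step activation: the perceptron outputs 1 or 0.
    return 1 if z > 0 else 0

def predict(weights, bias, inputs):
    return step(sum(w * x for w, x in zip(weights, inputs)) + bias)

def train_perceptron(samples, epochs=20, learning_rate=1.0):
    weights, bias = [0.0, 0.0], 0.0
    for _ in range(epochs):
        for inputs, target in samples:
            # Perceptron rule: nudge the weights only when the prediction is wrong.
            error = target - predict(weights, bias, inputs)
            weights = [w + learning_rate * error * x
                       for w, x in zip(weights, inputs)]
            bias += learning_rate * error
    return weights, bias

inputs_grid = [[0, 0], [0, 1], [1, 0], [1, 1]]
and_targets = [0, 0, 0, 1]  # linearly separable
xor_targets = [0, 1, 1, 0]  # not linearly separable

and_weights, and_bias = train_perceptron(list(zip(inputs_grid, and_targets)))
xor_weights, xor_bias = train_perceptron(list(zip(inputs_grid, xor_targets)))

and_predictions = [predict(and_weights, and_bias, x) for x in inputs_grid]
xor_predictions = [predict(xor_weights, xor_bias, x) for x in inputs_grid]

print(and_predictions)                 # matches and_targets
print(xor_predictions == xor_targets)  # False: at least one case is wrong
```

Adding a hidden layer removes this restriction, which is why multilayer networks can handle problems like XOR.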

What are artificial neural networks?

Neural networks are sometimes called artificial neural networks (ANN) to distinguish them from organic neural networks. After all, every person walking around today is equipped with a neural network. Neural networks interpret sensory data using a method of machine perception that labels or clusters raw input.

What is neural network architecture?

Neural network architecture is loosely modeled on the human brain. Human brain cells, referred to as neurons, form a highly interconnected, complex network and transmit electrical signals to one another, helping us process information. Likewise, artificial neural networks consist of artificial neurons that work together to solve problems.

What is a neural network based on?

The structure and operation of human neurons serve as the basis for artificial neural networks, which are also known as neural networks or neural nets. The input layer of an artificial neural network is the first layer; it receives input from external sources and passes it to the hidden layer, which is the second layer.

What is artificial neural network in machine learning?

In machine learning, an artificial neural network is a mathematical model used to approximate nonlinear functions. Artificial neural networks are used to solve artificial intelligence problems. In the context of biology, a neural network is a population of biological neurons chemically connected to each other by synapses.